Tap water isn’t drinkable. Power outages are common. The national average annual wage is $2,200. Yet rising on Jakarta’s outskirts are giant, windowless buildings packed inside with Nvidia’s latest artificial-intelligence chips. They mark Indonesia’s surprising rise as an AI hot spot, a market estimated to grow 30% annually over the next five years to $2.4 billion.

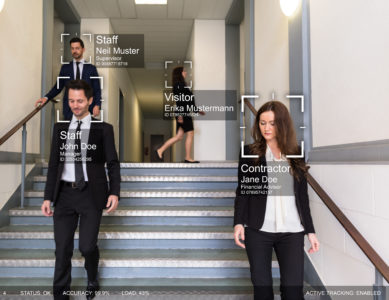

The multitrillion-dollar spending spree on AI has spread to the developing world. It is driven in part by a philosophy known in some academic circles as AI decolonization. The idea is simple. Foreign powers once extracted resources such as oil from colonies, offering minimal benefits to the locals. Today, developing nations aim to ensure that the AI boom enriches more than just Silicon Valley. Regulations effectively require tech companies such as Google and Meta to process local data domestically. That pushes companies to build or rent data facilities onshore instead of relying on global infrastructure. These investments add up to billions of dollars and create jobs that foster national talent, or so developing nations hope.

AI decolonization is a twist on data sovereignty, a concept that gained traction after Edward Snowden revealed that American tech companies cooperated with U.S. government surveillance of foreign leaders. The European Union in 2018 pioneered data-protection laws that other nations have since mimicked.

Regulations vary by country and industry, but the principle is this: If a developing-nation bank wants an American tech giant to store customer data and analyze it with AI, the bank must hire a company with domestically located servers… Nvidia Chief Executive Jensen Huang championed “sovereign AI” during a visit to Jakarta in 2024.

“No country can afford to have its natural resource—the data of its people—be extracted, transformed into intelligence and then imported back into the country,” Huang said…

Excerpt from Stu Woo, “It’s Not Just Rich Countries. Tech’s Trillion-Dollar Bet on AI Is Everywhere,” WSJ, Oct. 26, 2025.