Amid ongoing fears over TikTok, Chinese generative AI platform DeepSeek says it’s sending heaps of US user data straight to its home country, potentially setting the stage for greater scrutiny.

The United States’ recent regulatory action against the Chinese-owned social video platform TikTok prompted mass migration to another Chinese app, the social platform “Rednote.” Now, a generative artificial intelligence platform from the Chinese developer DeepSeek is exploding in popularity, posing a potential threat to US AI dominance and offering the latest evidence that moratoriums like the TikTok ban will not stop Americans from using Chinese-owned digital services…

In many ways, DeepSeek is likely sending more data back to China than TikTok has in recent years, since the social media company moved to US cloud hosting to try to deflect US security concerns.

“It shouldn’t take a panic over Chinese AI to remind people that most companies set the terms for how they use your private data,” says John Scott-Railton, a senior researcher at the University of Toronto’s Citizen Lab. “And that when you use their services, you’re doing work for them, not the other way around.”

To be clear, DeepSeek is sending your data to China. The English-language DeepSeek privacy policy, which lays out how the company handles user data, is unequivocal: “We store the information we collect in secure servers located in the People’s Republic of China.”

In other words, all the conversations and questions you send to DeepSeek, along with the answers that it generates, are being sent to China or can be. DeepSeek’s privacy policies also outline the information it collects about you, which falls into three sweeping categories: information that you share with DeepSeek, information that it automatically collects, and information that it can get from other sources… DeepSeek is largely free… “So what do we pay with? What… do we usually pay with: data, knowledge, content, information.” …

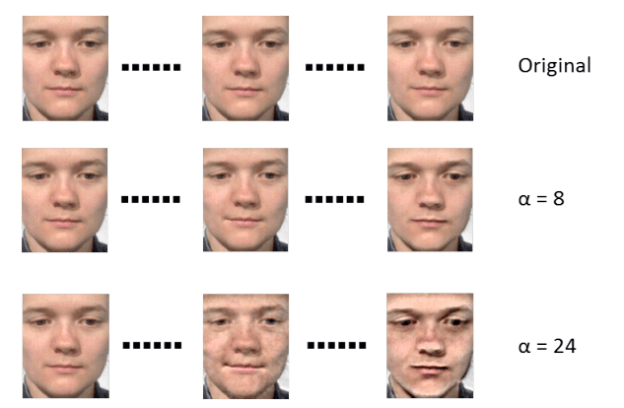

As with all digital platforms—from websites to apps—there can also be a large amount of data that is collected automatically and silently when you use the services. DeepSeek says it will collect information about what device you are using, your operating system, IP address, and information such as crash reports. It can also record your “keystroke patterns or rhythm.”…

Excerpts from Matt Burgess and Lily Hay Newman, “DeepSeek’s Popular AI App Is Explicitly Sending US Data to China,” Wired, Jan. 27, 2025